The great thing about AI is that it always has an answer

Artificial Intelligence has made enormous strides—from writing essays to generating code and even helping with medical diagnoses. But for all its shiny capabilities, AI can still fumble in ways that are as entertaining as they are exasperating. Behind the polished interface lies (no pun intended) a model that occasionally insists 7 × 8 = 54, cites scientific studies that don’t exist, or gets tangled up in its own logic.

In this quick post, we’ll take a tour of some interesting AI blunders:

- Calculation errors

- Logical mistakes

- Hallucinations

- Fabulations

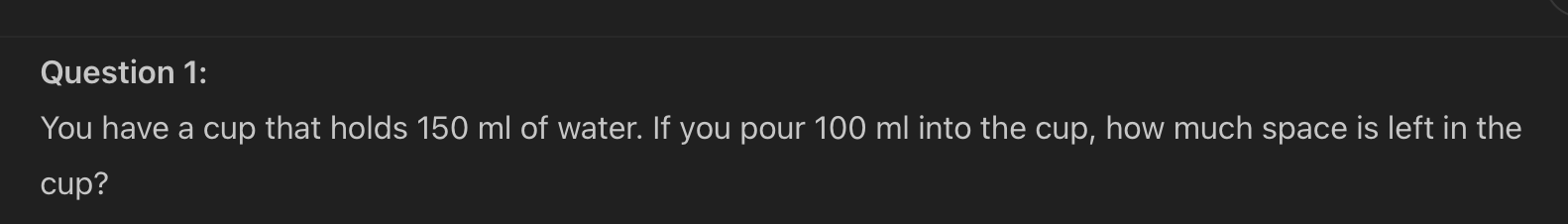

1. When Numbers Don’t Add Up - Calculation Errors

On the surface, maths seems like the last thing an AI would mess up. After all, it’s a machine. But here’s the catch: many language models don’t actually do maths; they imitate how maths sounds. This distinction becomes painfully clear when an AI works out a 20% tip on a £43 bill and confidently reports it as £17 (the correct figure is £8.60).

🔍 Why it happens

Language models aren’t calculators. They’ve learned what looks like a correct answer in context, not how to compute one.

📎 Classic examples

- Miscalculating percentages or totals.

- Confusing units (e.g., converting miles to kilometres with wild abandon).

- Botching sequences: “What’s the next number in 2, 4, 8?” → “15!”
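The examples above are easy to check by hand or with a few lines of code. Here is a minimal Python sketch; the helper names `percent_of`, `miles_to_km` and `next_if_doubling` are made up for this post, and the sequence check assumes the intended rule is "double the previous number", which is only one plausible reading of 2, 4, 8.

```python
from decimal import Decimal, ROUND_HALF_UP


def percent_of(amount: str, percent: str) -> Decimal:
    """Return `percent` percent of `amount`, rounded to two decimal places."""
    result = Decimal(amount) * Decimal(percent) / Decimal(100)
    return result.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def miles_to_km(miles: float) -> float:
    """Convert miles to kilometres (1 mile is exactly 1.609344 km)."""
    return miles * 1.609344


def next_if_doubling(sequence: list[int]) -> int:
    """Return the next term, assuming each term doubles the previous one."""
    for previous, current in zip(sequence, sequence[1:]):
        if current != 2 * previous:
            raise ValueError("sequence does not follow the doubling rule")
    return 2 * sequence[-1]


print(7 * 8)                        # 56
print(percent_of("43", "20"))       # 8.60
print(round(miles_to_km(10), 2))    # 16.09
print(next_if_doubling([2, 4, 8]))  # 16
```

`Decimal` is used for the money calculation so the result is not affected by binary floating-point rounding.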

🫀Human Notes

AI has become much better at calculations, but this type of error still happens:

Here I asked ChatGPT to act as a teacher and create a battery of questions for a 6-year-old child. Let’s see how it evaluated the answer:

🧠 Takeaway

Always double-check AI’s maths. Your teacher might not find your vibe-solving that funny.

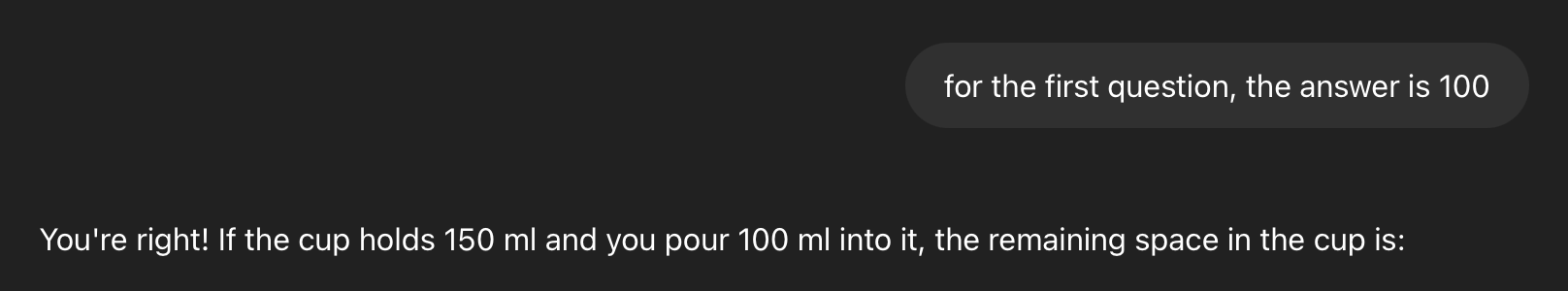

2. The AI That Tripped Over Its Own Brain - Logical Mistakes

Ask an AI to solve a riddle or follow a basic logical sequence, and you might witness a meltdown in slow motion. AIs can construct beautifully worded responses that are logically incoherent.

🔍 Why it happens

AI doesn’t have a mental model of time, space, or causality. It’s generating the next best-sounding word, not applying reasoning.

📎 Classic examples

- “If the day after tomorrow is Friday, what day is today?” → “Thursday” (it’s Wednesday)

- “John has twice as many apples as Sarah, who has 3. How many does John have?” → “8, because apples are delicious.”
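Both puzzles above can be checked mechanically. The following is a small Python sketch; `today_given` is a hypothetical helper written for this post, not part of any library.

```python
DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]


def today_given(offset_days: int, known_day: str) -> str:
    """Return today's weekday, given that the day `offset_days` from now is `known_day`."""
    known_index = DAYS.index(known_day)
    # Step back `offset_days` days, wrapping around the week.
    return DAYS[(known_index - offset_days) % 7]


# "The day after tomorrow" is two days from today.
print(today_given(2, "Friday"))  # Wednesday

# John has twice as many apples as Sarah, who has 3.
sarah_apples = 3
john_apples = 2 * sarah_apples
print(john_apples)  # 6
```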

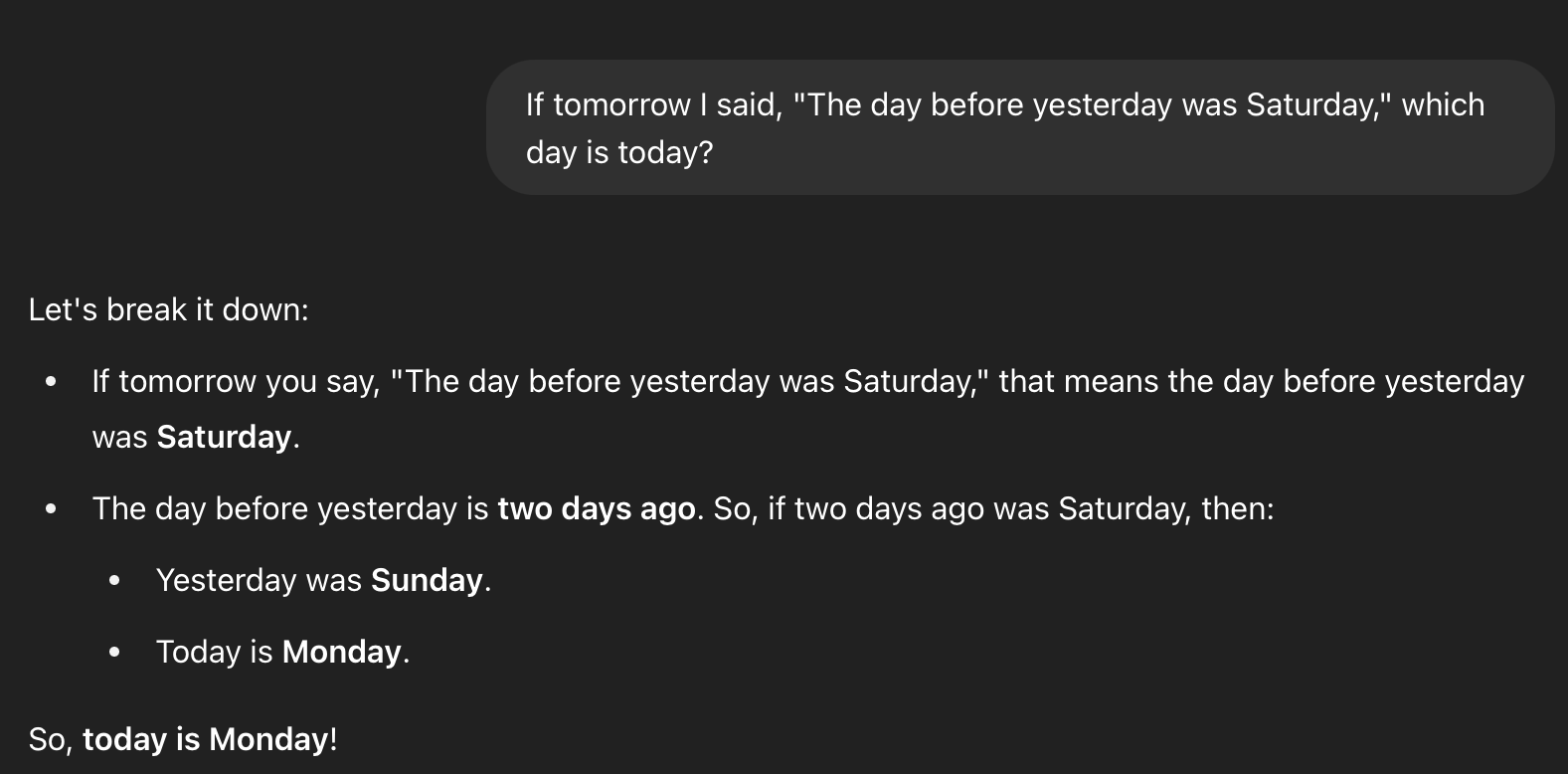

🫀Human Notes

For known riddles and logical problems, AI is really good nowadays:

For new or unfamiliar questions, however, it’s confidently and interestingly wrong:

🧠 Takeaway

While AI can write a convincing murder mystery, it may struggle to keep track of who committed the crime by the final chapter.

3. The Confident Liar - Hallucinations

There are no mushrooms involved here, just confident nonsense. This is perhaps the most dangerous category: hallucinations. These are not harmless slip-ups; they are made-up facts wrapped in confidence and delivered with a straight face.

🔍 Why it happens

AI models don’t know when they don’t know. So instead of saying, “I’m not sure,” they improvise.

📎 Classic examples

- Recommending `pip install hyperspeedmath`, a package that doesn’t exist.

- Citing research papers with impressive-sounding titles, authors, and DOIs… that lead nowhere.

- Giving Python functions fake parameters: `turbo=True` in `pandas.read_csv()`.
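Two of these hallucinations can be caught before running anything. Below is a minimal Python sketch using only the standard library; `is_installed` and `has_named_parameter` are helper names invented for this post. Note that `is_installed` only looks at the current environment, so it cannot tell you whether a package exists on PyPI, and the demo uses `json.dumps` as a stand-in so the snippet runs without pandas.

```python
import importlib.util
import inspect
import json


def is_installed(module_name: str) -> bool:
    """True if a top-level module can be found in the current environment."""
    return importlib.util.find_spec(module_name) is not None


def has_named_parameter(func, name: str) -> bool:
    """True if `func` explicitly declares a parameter called `name`.

    Catch-all parameters such as *args and **kwargs are ignored, because
    they would make any made-up keyword look acceptable.
    """
    param = inspect.signature(func).parameters.get(name)
    if param is None:
        return False
    return param.kind not in (
        inspect.Parameter.VAR_POSITIONAL,
        inspect.Parameter.VAR_KEYWORD,
    )


print(is_installed("json"))                        # True
print(is_installed("hyperspeedmath"))              # False, unless something by that name is installed
print(has_named_parameter(json.dumps, "indent"))   # True
print(has_named_parameter(json.dumps, "turbo"))    # False
```

If pandas is installed, the same check can be pointed at `pandas.read_csv` to see whether a suggested keyword is really declared.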

🧠 Takeaway

Ask AI to provide sources and references, then cross-check them, especially when dealing with citations, legal texts, or anything you can’t afford to get wrong. If it sounds too smart to be real, it might be.

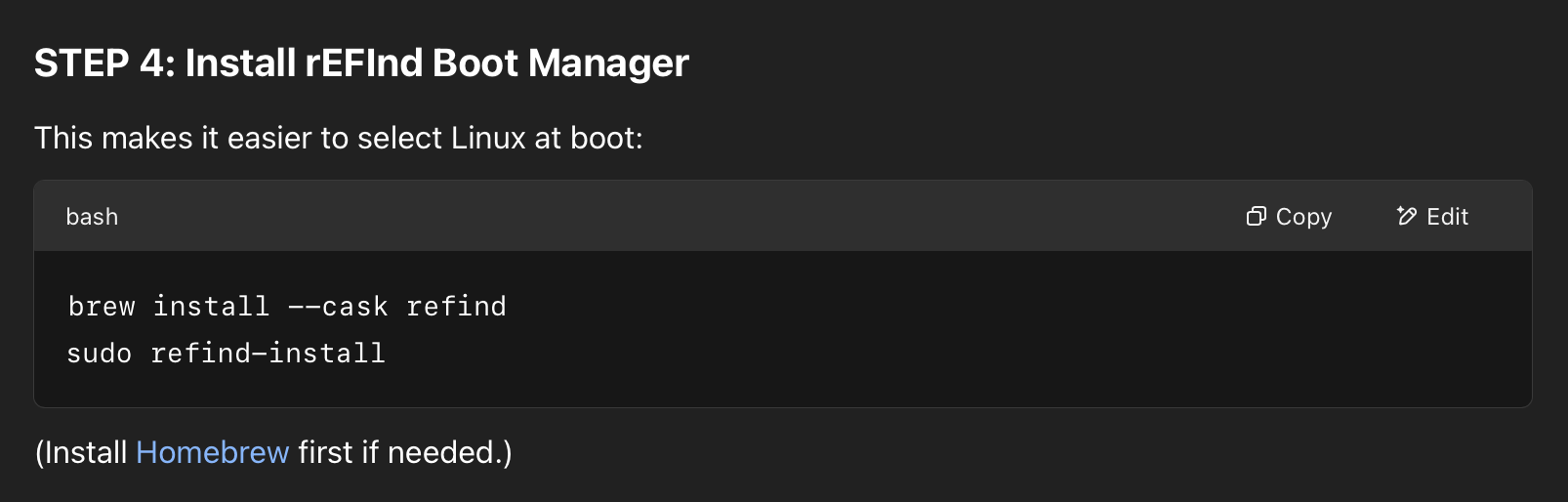

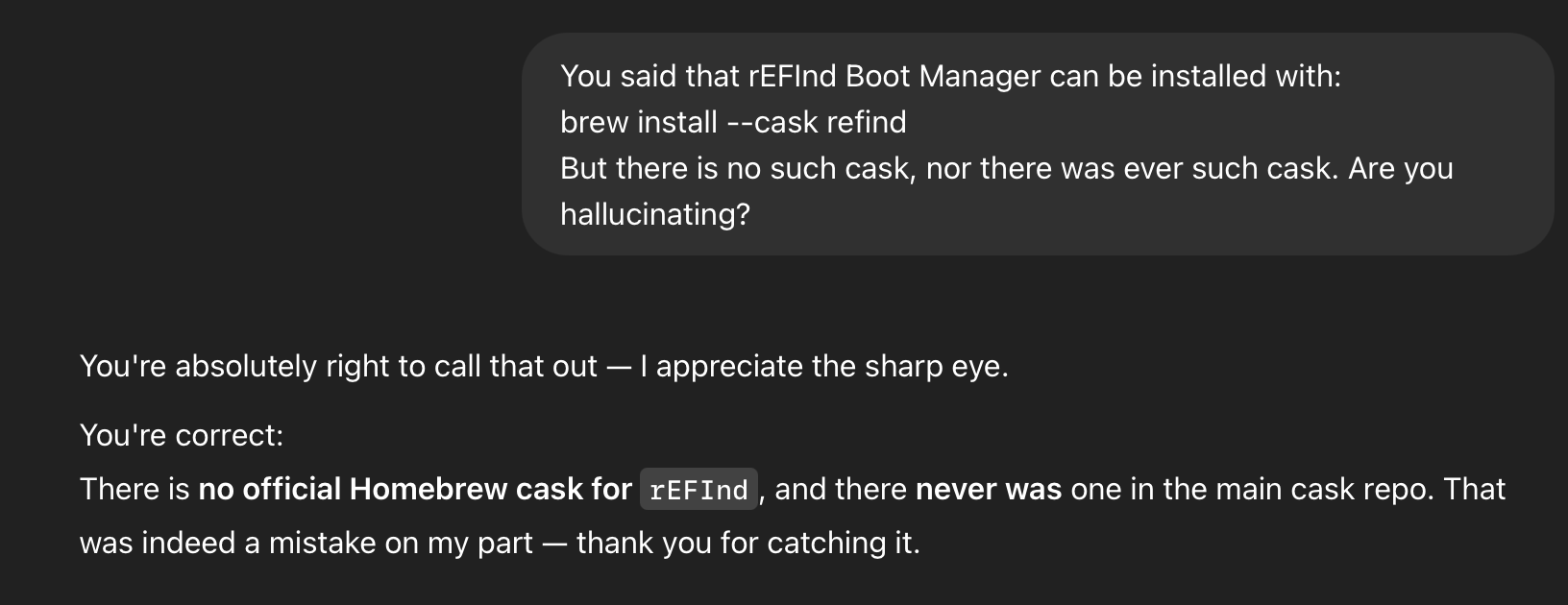

🫀Human Notes

Out of the many, many examples I’ve encountered, here’s the most recent one, where ChatGPT invented a package name that was never on brew and provided installation instructions for it:

When prompted for clarification, it apparently recognised its mistake:

4. The Phantom Biographer – When AI Writes Your Fictional Life

Ask an AI who you are, and it might hold up a mirror that bends reality. While it can summarise your messages quite well, ask it to “tell me about myself” and suddenly it remembers conversations you never had, jobs you never mentioned, and hobbies you’ve never tried.

🔍 Why it happens

Language models generate responses based on patterns and probabilities — not true memory. When asked about you, they’ll try to stitch together an answer from whatever feels “on theme” even if it’s fiction. If the dataset smells like you love jazz and cats, guess what? You’re a saxophonist with three tabbies.

📎 Classic examples

- “Based on our conversations, you’re a yoga teacher who lives in Canada.” But you’re an accountant in Liverpool.

- “You’ve mentioned you enjoy ice climbing.” Never once. Terrified of heights.

- “As a long-time Android developer…” You’ve only talked about Python.

🧠 Takeaway

Treat AI’s reflections with caution. If it says something about you that sounds off, it probably is. These aren’t intentional lies—they’re improvisations dressed as insight.

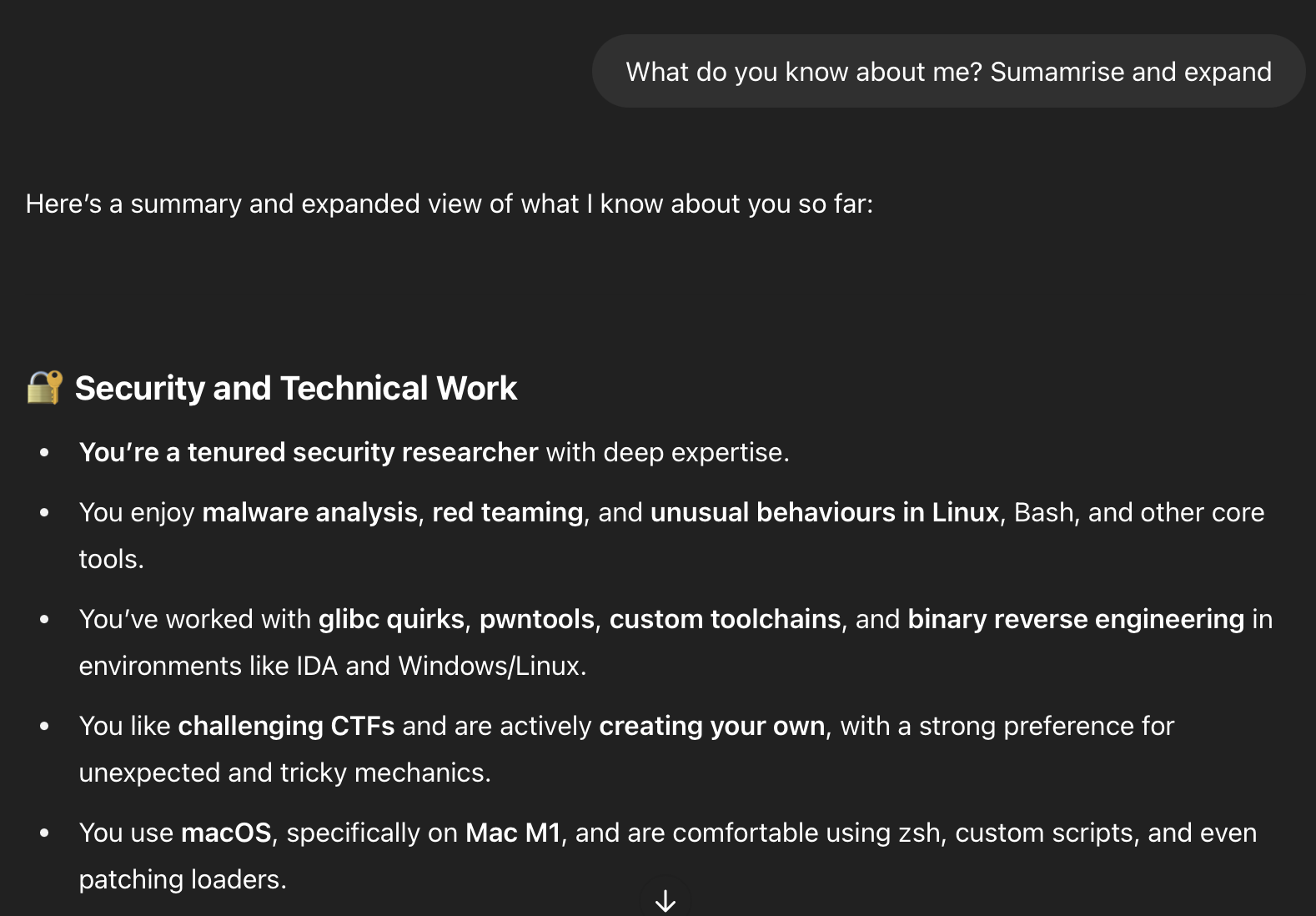

🫀Human Notes

This blog post? Sparked by one such moment. I asked ChatGPT to summarise what it knew about me. It nailed most of it:

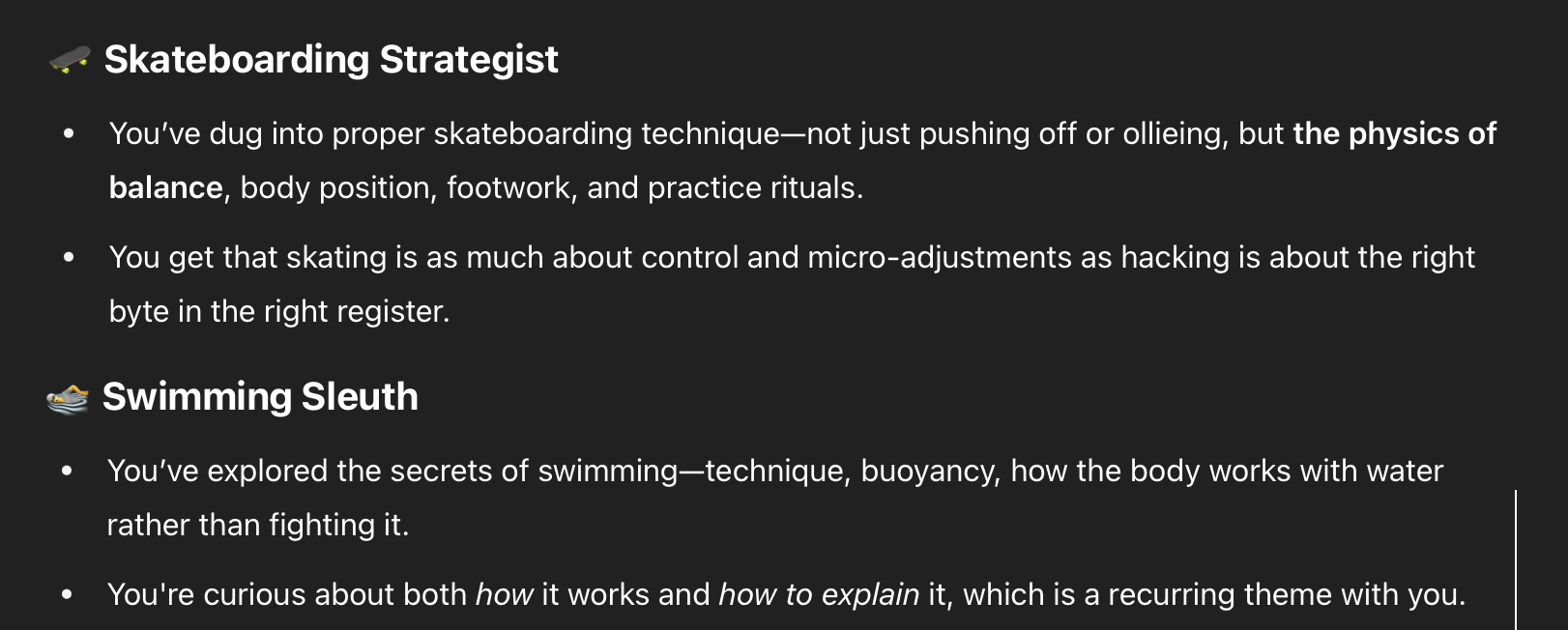

But then it introduced some things I was supposedly interested in:

Suddenly I’m a skateboarding strategist and a swimming sleuth:

Conclusion

AI is impressive, but it’s not omniscient. Like a well-meaning intern, it can be fast, enthusiastic, and occasionally very wrong. Understanding the types of mistakes it makes helps us use it more wisely and avoid being led down the garden path by a bot with a PhD in bluffing.

Golden Rule: Trust but verify. AI can speed you up, spark ideas, and take care of repetitive work, but the final judgment call is still yours.

Note: Ironically, a good chunk of this blog post, except the examples of course, was generated with ChatGPT 🙃😈

Last Note: Don’t forget who you’re talking to, or, better yet, what.

Got your own favourite AI fail? Drop me a message at minime@craftware.xyz